Understanding Broker Cluster and Zookeeper in Kafka: A Beginner’s Guide: Part 4

Apache Kafka is one of the most popular distributed messaging systems used for building real-time data pipelines and streaming applications. One of its key strengths lies in its scalability and fault tolerance, achieved through Kafka’s broker cluster and its dependency on Zookeeper for managing metadata and coordination.

In this blog, we’ll explore how Kafka brokers and Zookeeper work together to form a powerful and reliable messaging system. We’ll break down these concepts step by step, making it easy to understand for newcomers. Whether you’re setting up Kafka for the first time or trying to understand its architecture, this guide will help you get started.

Overview of Kafka Architecture

Before diving into brokers and Zookeeper, it’s important to have a general understanding of Kafka’s architecture:

- Kafka Topic: A category to which records are sent. Topics are divided into partitions to allow scalability.

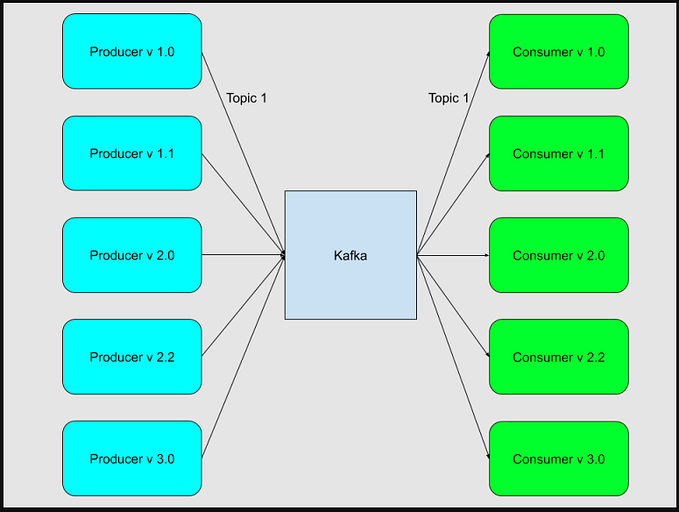

- Producer: A client application that sends messages (data) to Kafka topics.

- Consumer: A client application that reads messages from Kafka topics.

- Broker: A Kafka broker is a server that stores data (messages) in partitions of topics.

- Zookeeper: A centralized coordination service for Kafka brokers. It tracks which brokers are alive in the cluster, supports leader elections, and maintains metadata about Kafka topics and partitions.

Now, let’s focus on the role of Kafka brokers and Zookeeper.

Kafka Broker Cluster: The Backbone of Kafka

What is a Kafka Broker?

A Kafka broker is a server that receives data from producers, stores it, replicates it, and serves it to consumers on request. Each broker hosts one or more partitions, which may belong to different topics.

Why Use Multiple Brokers?

Kafka’s strength comes from its ability to distribute data across multiple brokers. When you create a Kafka cluster, it consists of multiple brokers working together to handle higher throughput and fault tolerance. Here’s why you should use multiple brokers:

- Scalability: Multiple brokers allow Kafka to handle more data and serve more producers and consumers. Data is spread across brokers by dividing topics into partitions and placing those partitions on different brokers. A single broker can hold several partitions.

- Fault Tolerance: If one broker fails, other brokers in the cluster can take over. When a topic’s replication factor is greater than 1, Kafka keeps copies of each partition on several brokers, so data survives the loss of a single broker.

- Load Balancing: When a topic is created, Kafka spreads its partitions across the available brokers so that no single broker has to carry all the data. This improves overall performance.

How Brokers Work in a Cluster

In a Kafka cluster:

- Topics are divided into partitions, and each partition is stored on one or more brokers.

- Each partition has a leader broker that handles all write requests and, by default, all read requests for that partition. Other brokers in the cluster may store a replica of the partition’s data.

- If the leader broker fails, a new leader is elected from the remaining replicas, normally one that is in sync with the old leader, with Zookeeper coordinating the process.
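The failover rule in the last bullet can be sketched in a few lines of Python. This is a toy model, not Kafka’s own code: the `Partition` class and the `fail_broker` function are hypothetical names, and the sketch assumes the new leader is simply the first surviving replica in assignment order.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


@dataclass
class Partition:
    """Toy model of one partition: an ordered replica list and a current leader."""
    replicas: List[int]            # broker ids holding a copy, in assignment order
    leader: Optional[int] = None   # broker id currently serving the partition

    def __post_init__(self) -> None:
        # Assumption for this sketch: the first replica starts out as leader.
        if self.leader is None and self.replicas:
            self.leader = self.replicas[0]


def fail_broker(partition: Partition, live_brokers: Set[int], failed: int) -> Optional[int]:
    """Mark `failed` as down and, if it led the partition, promote a survivor."""
    live_brokers.discard(failed)
    if partition.leader == failed:
        survivors = [b for b in partition.replicas if b in live_brokers]
        # No surviving replica means the partition has no leader (None).
        partition.leader = survivors[0] if survivors else None
    return partition.leader


live = {1, 2, 3}
p0 = Partition(replicas=[1, 2, 3])
print("leader before failure:", p0.leader)
print("leader after broker 1 fails:", fail_broker(p0, live, 1))
```

Losing a broker that only holds a follower copy leaves the leader unchanged, which is why the function checks `partition.leader == failed` before promoting anyone.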

Example:

Let’s say you have a Kafka topic with 4 partitions, and you have a cluster of 3 brokers. Kafka will distribute these partitions across the brokers:

- Broker 1 might manage Partition 0 and Partition 1.

- Broker 2 might manage Partition 2.

- Broker 3 might manage Partition 3.

This setup spreads the workload across the brokers so that each one handles only part of the data. With 4 partitions and 3 brokers, one broker necessarily holds one more partition than the others.
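To see how such a layout could come about, here is a minimal round-robin sketch. It is an illustration only: `assign_partitions` and `leaders_per_broker` are hypothetical helpers, and Kafka’s real assignment logic is more involved (for example, it can take racks into account), so the exact placement on a real cluster may differ from what this prints.

```python
from collections import defaultdict
from typing import Dict, List


def assign_partitions(num_partitions: int, brokers: List[int],
                      replication_factor: int = 1) -> Dict[int, List[int]]:
    """Map each partition to a list of broker ids; the first id is the leader."""
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for p in range(num_partitions):
        # Walk around the broker list, starting one broker further for each partition.
        assignment[p] = [brokers[(p + r) % len(brokers)]
                         for r in range(replication_factor)]
    return assignment


def leaders_per_broker(assignment: Dict[int, List[int]]) -> Dict[int, List[int]]:
    """Group partition numbers by the broker that leads them."""
    leaders = defaultdict(list)
    for partition, replicas in assignment.items():
        leaders[replicas[0]].append(partition)
    return dict(leaders)


layout = assign_partitions(num_partitions=4, brokers=[1, 2, 3], replication_factor=2)
for partition, replicas in layout.items():
    print(f"partition {partition}: leader={replicas[0]} replicas={replicas}")
print(leaders_per_broker(layout))
```

With 4 partitions and 3 brokers, one broker ends up leading two partitions, mirroring the example above.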

Zookeeper: Kafka’s Coordinator

What is Zookeeper?

Zookeeper is a centralized service used by Kafka for managing and coordinating the Kafka brokers. It keeps track of the state of the Kafka cluster, including:

- Broker Registration: Zookeeper knows which brokers are alive and part of the cluster.

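- Leader Election: When a broker leading a partition fails, Zookeeper coordinates the choice of a new leader. In practice, one broker acts as the controller (itself elected through Zookeeper) and picks the new leader from the partition’s replicas.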

- Metadata Management: Zookeeper maintains information about Kafka topics, partitions, and replicas.

Zookeeper plays a critical role in ensuring that the Kafka cluster is fault-tolerant and that brokers operate smoothly.

Why Use Multiple Zookeepers?

In production environments, running multiple Zookeeper nodes (called an ensemble) is essential for fault tolerance. If a single Zookeeper server goes down, the ensemble can continue to function as long as a majority of nodes are still running.
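The majority rule can be made concrete with a little arithmetic. The sketch below uses two hypothetical helper functions to show how many node failures an ensemble of a given size can absorb while a majority is still running.

```python
def quorum_size(ensemble_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return ensemble_size // 2 + 1


def tolerated_failures(ensemble_size: int) -> int:
    """How many nodes can fail while a majority is still running."""
    return ensemble_size - quorum_size(ensemble_size)


for n in (1, 3, 4, 5):
    print(f"{n} nodes: quorum={quorum_size(n)}, "
          f"tolerated failures={tolerated_failures(n)}")
```

A 4-node ensemble tolerates no more failures than a 3-node one, which is why ensembles are usually sized with an odd number of nodes.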

How Zookeeper and Kafka Work Together

Here’s how Kafka and Zookeeper interact:

- Broker Registration: When a Kafka broker starts up, it registers itself with Zookeeper. Zookeeper maintains a list of all active brokers in the cluster.

- Leader Election: For each partition, Zookeeper keeps track of the leader broker. If the leader broker crashes, a new leader is chosen from the replicas and the change is recorded in Zookeeper.

- Cluster Metadata Management: Zookeeper stores the configuration and metadata for the Kafka cluster, including information about topics, partitions, and their replication.

- Consumer Offsets: Older Kafka consumers stored the offset at which they were reading from a topic in Zookeeper. Modern Kafka versions store offsets in an internal Kafka topic (__consumer_offsets) instead.
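The broker registration step can be pictured with a small in-memory model. This is not a Zookeeper client and does not use any Zookeeper API: `ToyRegistry` is a hypothetical class, and the 6-second session timeout is an arbitrary value chosen for the example. It mimics the idea that a broker’s registration is tied to a live session, so a broker that stops sending heartbeats drops out of the active list.

```python
from typing import Dict, List


class ToyRegistry:
    """In-memory stand-in for the list of active brokers kept by the coordinator."""

    def __init__(self, session_timeout: float) -> None:
        self.session_timeout = session_timeout
        self._last_seen: Dict[int, float] = {}  # broker id -> time of last heartbeat

    def register(self, broker_id: int, now: float) -> None:
        """Called when a broker starts up."""
        self._last_seen[broker_id] = now

    def heartbeat(self, broker_id: int, now: float) -> None:
        """Refresh the session of a broker that is already registered."""
        if broker_id in self._last_seen:
            self._last_seen[broker_id] = now

    def live_brokers(self, now: float) -> List[int]:
        """Brokers whose session has not expired at time `now`."""
        return sorted(b for b, seen in self._last_seen.items()
                      if now - seen <= self.session_timeout)


registry = ToyRegistry(session_timeout=6.0)
for broker_id in (1, 2, 3):
    registry.register(broker_id, now=0.0)
print(registry.live_brokers(now=4.0))   # every session is still fresh

registry.heartbeat(1, now=5.0)
registry.heartbeat(3, now=5.0)          # broker 2 stays silent
print(registry.live_brokers(now=8.0))   # broker 2's session has expired
```

Times are passed in explicitly rather than read from the clock so that the example behaves the same on every run.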

Setting Up a Kafka Cluster with Multiple Brokers and Zookeeper Nodes

Now, let’s look at how to set up a Kafka cluster with multiple brokers and multiple Zookeeper nodes.

Step 1: Set Up Zookeeper Nodes

In a production environment, you should set up a Zookeeper ensemble with at least 3 nodes for fault tolerance.

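Configure Zookeeper on each node by editing its configuration file (zoo.cfg in a standalone Zookeeper install, or config/zookeeper.properties when using the scripts bundled with Kafka) and specifying the list of Zookeeper servers. Each node also needs a myid file in dataDir containing its own server number (1, 2, or 3 here):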

tickTime=2000

dataDir=/var/lib/zookeeper

clientPort=2181

initLimit=5

syncLimit=2

server.1=zookeeper1:2888:3888

server.2=zookeeper2:2888:3888

server.3=zookeeper3:2888:3888

Start Zookeeper on each node:

bin/zookeeper-server-start.sh config/zookeeper.properties

Step 2: Set Up Kafka Brokers

Each broker needs its own unique configuration. Here’s how to configure multiple brokers:

Create separate configuration files for each broker (e.g., server1.properties, server2.properties).
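One way to produce these per-broker files is to generate them from a template. The sketch below is a convenience script, not part of Kafka: `broker_properties` is a hypothetical helper, and the port numbering (9092, 9093, ...) and per-broker log directory layout are assumptions that matter mainly when several brokers share one machine. It uses the same four settings that appear in this step and prints the result instead of writing files.

```python
from typing import Dict, List


def broker_properties(broker_id: int, zookeeper_hosts: List[str],
                      base_port: int = 9092) -> str:
    """Render the properties text for one broker."""
    # Assumption: broker N listens on base_port + N - 1 and has its own log directory.
    port = base_port + broker_id - 1
    settings: Dict[str, str] = {
        "broker.id": str(broker_id),
        "listeners": f"PLAINTEXT://:{port}",
        "log.dirs": f"/var/lib/kafka/broker-{broker_id}",
        "zookeeper.connect": ",".join(zookeeper_hosts),
    }
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"


zk = ["zookeeper1:2181", "zookeeper2:2181", "zookeeper3:2181"]
for broker_id in (1, 2, 3):
    print(f"# server{broker_id}.properties")
    print(broker_properties(broker_id, zk))
```

When each broker runs on its own host, the same port and log directory can be reused everywhere and only broker.id has to change.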

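Assign a unique broker.id to each broker in its configuration file. If several brokers share one machine, also give each its own listeners port and log.dirs directory: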

broker.id=1

listeners=PLAINTEXT://:9092

log.dirs=/var/lib/kafka

zookeeper.connect=zookeeper1:2181,zookeeper2:2181,zookeeper3:2181

Start each broker using its configuration file:

bin/kafka-server-start.sh config/server1.properties

Step 3: Verify the Cluster Setup

Once all brokers and Zookeeper nodes are running, you can verify the cluster setup by describing an existing topic (my-topic in this example):

bin/kafka-topics.sh --describe --topic my-topic --bootstrap-server localhost:9092

This will show the partition distribution and replica assignment across brokers.

Conclusion

In this blog post, we explored how Kafka brokers and Zookeeper work together to provide scalability and fault tolerance in a Kafka cluster. By distributing data across multiple brokers and coordinating through Zookeeper, Kafka ensures high availability and reliability, even in the face of failures.

Understanding the architecture of Kafka’s broker cluster and Zookeeper is crucial for building robust, scalable, and resilient real-time data pipelines. Whether you’re a beginner or an experienced Kafka user, this knowledge will help you design and manage Kafka clusters more effectively.

If you have any questions or need further clarification, feel free to leave a comment below. Happy streaming with Kafka!